Microsoft AzureAD and Office365 - Not secure by default

Or 3 clicks and you are dead

Cloud identity is a great thing these days. You can sign up to lots of services using your social identity from Facebook, Google, or Microsoft. You do not have to invent new passwords (or reuse passwords used elsewhere), and you get easy Single Sign-On. For the vast majority of users this has more advantages than drawbacks. The purpose of this article is to create awareness of some of the dangers.

Microsoft AzureAD - The special case

Your Microsoft company identity is just another cloud identity for most services, and in many ways it is better than some of the others. You get secure multi-factor logon, and you might even get passwordless logon. Conditional Access can protect your sign-in attempts even more, maybe by blocking countries like China, Russia and Brazil, or by evaluating risk. It all depends on how the enterprise has configured things.

It is trivial to create your own web server that offers social logon using Microsoft identities. Microsoft claims it takes 5 minutes, and this is true. Anybody with an e-mail address can create a Microsoft account and register an app with Azure that lets them sign in users with their Microsoft identity.

The website developer uses the nice "Sign in with Microsoft" button provided by Microsoft, and Microsoft handles the sign-in, with all the extra security, and asks for the user's consent to pass data on to the Azure-registered app.

This is great. It opens the door for lots of developers to create apps that help users work with their data in Office365, or in many cases with enterprise-owned data in Office365 to which the user has been granted access.

One great example is the https://draw.io/ web application. It lets you read and save your drawings directly on a local drive, OneDrive or Google Drive, so you can work on the same document from your phone, iPad or PC. To do so, draw.io needs the user's consent to read and write files on the user's OneDrive.

What is the problem?

The problem is that, by default, user consent is permitted for all enterprise users. A few permissions require an administrator's consent, but by default the user can give any 3rd party (friendly, hostile or malicious) full read-write permissions to most of the data he can reach in Office365. draw.io could abuse the user's consent to copy all the user's files to their web servers if they saw a benefit in doing so.

A web app can ask for permissions to read and write OneDrive, SharePoint, Mail, Calendar, Contacts, Teams etc., and for offline access. That means the web app can access these services whenever it wants, using the tokens Microsoft handed to it as a result of the user's logon and consent (with offline access, this includes a refresh token that the app can exchange for new access tokens). It can even ask for a list of all user identities in the directory, including all those secret admin accounts with no e-mail address.
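To illustrate what "offline access" means in practice, here is a minimal sketch, using only the Python standard library, of the request a web app's server could send to the Microsoft identity platform v2.0 token endpoint to trade a stored refresh token for a fresh access token. The client ID, secret, and token values are hypothetical placeholders, and the request is only built, not sent.

```python
from urllib.parse import urlencode
from urllib.request import Request

# Microsoft identity platform v2.0 token endpoint (multi-tenant "common" authority).
TOKEN_URL = "https://login.microsoftonline.com/common/oauth2/v2.0/token"


def build_refresh_request(client_id: str, client_secret: str,
                          refresh_token: str, scopes: list[str]) -> Request:
    """Build (but do not send) a refresh_token grant request.

    No user interaction is involved: the app's own server presents its
    credentials plus the refresh token it kept from the original sign-in.
    """
    form = {
        "client_id": client_id,
        "client_secret": client_secret,
        "grant_type": "refresh_token",
        "refresh_token": refresh_token,
        "scope": " ".join(scopes),
    }
    return Request(
        TOKEN_URL,
        data=urlencode(form).encode("ascii"),
        method="POST",
        headers={"Content-Type": "application/x-www-form-urlencoded"},
    )


# Hypothetical values, for illustration only.
req = build_refresh_request("00000000-0000-0000-0000-000000000000",
                            "app-secret", "stored-refresh-token",
                            ["offline_access", "Files.ReadWrite", "Mail.ReadWrite"])
```

What matters here is what is missing: there is no browser, no device and no user in this exchange, only the app's secret and the stored token.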

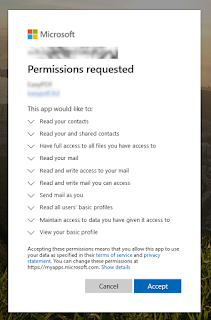

The user does not see or realize what he is consenting to when pressing the big blue Accept button. None of the users we interviewed did. Some remembered pressing the blue Continue / OK button, but that was all. Most did not remember consents they had given even a few days or weeks earlier. Some users remembered trying the app, then stopping using it and maybe uninstalling it. They did not realize that the app still had permissions to their data in Office365.

Identity security evaluates the logon made by the user on his PC or phone. It is the PC or phone that is subject to scrutiny such as Conditional Access, geo-blocking, multi-factor logon etc. The web application that receives the access token can use that token from anywhere, and, by refreshing it, more or less forever. At some point the token might become invalid, and the web app would then need the user to do the Single Sign-On with the Microsoft identity again. But consent is not asked for again until the app wants more access.

So even if the enterprise implemented strict Conditional Access, blocked China, Russia and Brazil for the user, the web app can still use the token from those blocked countries. There is NO Conditional Access or added security on logins using an access token. Tokens work more or less like the app-passwords typically used by mail applications in Multi Factor Authentication scenarios.
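An access token is a bearer token: under the bearer-token scheme described in RFC 6750, the party that presents it in an `Authorization: Bearer` header is treated as its rightful holder. The sketch below decodes the payload of a JWT, for inspection only, to show the kind of claims such a token carries (audience, scopes, expiry). It does not verify the signature, and the sample token is a made-up, unsigned stand-in built in the code, not a real Microsoft token.

```python
import base64
import json
from datetime import datetime, timezone


def b64url_encode(obj: dict) -> str:
    """JSON-encode obj and base64url-encode it without padding (JWT style)."""
    raw = json.dumps(obj).encode("utf-8")
    return base64.urlsafe_b64encode(raw).rstrip(b"=").decode("ascii")


def decode_jwt_claims(token: str) -> dict:
    """Return the payload claims of a JWT WITHOUT verifying its signature."""
    payload = token.split(".")[1]
    payload += "=" * (-len(payload) % 4)  # restore stripped base64 padding
    return json.loads(base64.urlsafe_b64decode(payload))


# A made-up, unsigned sample token for illustration (not issued by Microsoft).
sample_token = ".".join([
    b64url_encode({"alg": "none", "typ": "JWT"}),
    b64url_encode({"aud": "https://graph.microsoft.com",
                   "scp": "Files.ReadWrite Mail.ReadWrite User.Read",
                   "exp": 1700000000}),
    "",
])

claims = decode_jwt_claims(sample_token)
expires = datetime.fromtimestamp(claims["exp"], tz=timezone.utc)
# Any process holding sample_token would send exactly this header.
auth_header = {"Authorization": f"Bearer {sample_token}"}
```

The `exp` claim bounds the lifetime of one access token; with offline access, the app simply obtains new ones.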

The enterprise does get an entry in the Azure AD log saying that this application has logged on. If the enterprise has bought additional licenses, such as Azure AD P2, it can see that user X has written file Y. But when a web app does the writing, the IP address in the logs is that of the Microsoft Graph API servers calling OneDrive, SharePoint etc., so the action cannot be traced to the web app.

When my test web app uploaded the EICAR anti-virus test file to OneDrive using the Graph API, it did so with no problems. Microsoft did not detect the malware; my local PC anti-virus client detected it when I accessed the file in my OneDrive folder. But tracing who had used the user's credentials/token to upload it was not possible. The event from Microsoft Cloud App Security is shown below.
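For readers who want to see what such an upload looks like, here is a minimal sketch of a Microsoft Graph simple-upload request to the `PUT /me/drive/root:/{path}:/content` endpoint, authorized with a delegated access token. The token, file name, and content are hypothetical placeholders, harmless text is used instead of the EICAR file, and the request is only constructed, not sent.

```python
from urllib.parse import quote
from urllib.request import Request

GRAPH_BASE = "https://graph.microsoft.com/v1.0"


def build_onedrive_upload(access_token: str, path: str, content: bytes) -> Request:
    """Build a Graph simple-upload request that writes `content` to
    `path` in the signed-in user's OneDrive (intended for small files)."""
    url = f"{GRAPH_BASE}/me/drive/root:/{quote(path)}:/content"
    return Request(
        url,
        data=content,
        method="PUT",
        headers={
            "Authorization": f"Bearer {access_token}",
            "Content-Type": "application/octet-stream",
        },
    )


# Hypothetical token and file; sending it would need urllib.request.urlopen(req).
req = build_onedrive_upload("stored-access-token", "Documents/report.txt",
                            b"harmless test content")
```

Nothing in the request identifies the sending server; it carries only the user's token.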

How can this be exploited?

There is no vetting process for web apps like there is for apps in the Apple App Store or Google Play Store. Anybody who can get an e-mail address can create a web app and start asking users for consent.

If the actor is malicious in the long term, he can deliver a service of value but ask for more permissions than needed, and abuse them to read data from OneDrive, mailboxes and SharePoint sites. Or he can simply come up with an explanation for why he needs wide permissions.

He can extract data, or infect trusted files on employees' OneDrive. And he can put a document on SharePoint sites telling people to actually run the embedded malicious code, or visit a malicious link.

It only takes a user 3 clicks

- One click on a login button, or on a link in an e-mail, pointing to Microsoft (a link starting with https://login.microsoftonline.com/common/oauth2/v2.0/authorize?).

- One click on your username on the Microsoft web page, then maybe some MFA/Conditional Access/login, or just Single Sign-On as you are used to.

- One click on the big blue button on the consent page, also presented by Microsoft.
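The first click in the list above is an ordinary link. A minimal sketch of how an app could build such an authorization-code request URL is shown below. The parameters are the standard ones for the Microsoft identity platform v2.0 authorize endpoint, while the client ID, redirect URI, and scope list are hypothetical examples.

```python
import secrets
from urllib.parse import urlencode

AUTHORIZE_URL = "https://login.microsoftonline.com/common/oauth2/v2.0/authorize"


def build_consent_link(client_id: str, redirect_uri: str,
                       scopes: list[str]) -> tuple[str, str]:
    """Return (link, state). The `state` value should be stored and checked
    when the user is redirected back, to tie the response to this request."""
    state = secrets.token_urlsafe(16)
    query = urlencode({
        "client_id": client_id,
        "response_type": "code",
        "redirect_uri": redirect_uri,
        "response_mode": "query",
        "scope": " ".join(scopes),
        "state": state,
    })
    return f"{AUTHORIZE_URL}?{query}", state


link, state = build_consent_link(
    "00000000-0000-0000-0000-000000000000",  # hypothetical app (client) ID
    "https://app.example.com/callback",       # hypothetical redirect URI
    ["offline_access", "Files.ReadWrite.All", "Mail.ReadWrite", "User.ReadBasic.All"],
)
```

The host in the resulting link is login.microsoftonline.com, which is why it looks trustworthy; the `scope` parameter is the part worth reading.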

All clicks are on Microsoft links.

3 clicks and you are dead. Password phishing is so old-school, as phished credentials are subject to additional security when used for logon. Tokens can be used with no additional security checks.

Why did Microsoft make it so easy?

Microsoft likely made it easy for users in order to grow a market of software products built on Office365 (the Office365 ecosystem), giving users and enterprises access to lots of additional functionality that ties the enterprise closer to Microsoft. With all this easy access, they have created a sort of uncontrolled external app store for Office365: web apps working on employees' enterprise data in the Office365 cloud.

The decision NOT to require administrator approval by default for every single app accessing company Office365 data is likely a very conscious one, as requiring it would have killed the Office365 web app market before it even took off. And they still have the excuse that the user has given consent. None of the interviewed users knew they had given consent, so the question is: how much is that consent worth? In some countries it is worth nothing, especially since Microsoft knows the data belongs to the paying enterprise.

It is possible that everybody just thought it should be easy for developers, and nobody thought of potential abuse by bad actors. But since the Cloud App Security portal does have a button to report malicious web apps, the thought of malicious web apps has certainly crossed minds at Microsoft, and they decided to let customers run the risk while keeping them in the dark.

So the decision to give users an easy way to hand over company data they clearly do not own, to parties they might not trust, can only have been made for short-term commercial reasons at management level. I am sure the developers have tried to raise concerns, if Microsoft's company culture allows it.

Surely this is not security by default, as required in the EU by the GDPR. The option to make it more secure is there, but it is not the default. A secure default would slow down the growth of the ecosystem, and thus economic growth.

And the tools to investigate it cost yet another steep fee to Microsoft.

Does this reach further?

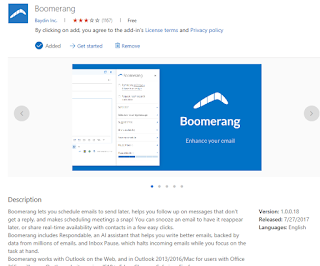

Yes, it does. In Outlook, I have a button named "Get Add-ins". It sends me to the Office Store, where I can download smart plugins for the Office suite. Some of our users use an e-mail productivity suite called "Boomerang" by Baydin Inc. It is in the Office Store, so of course our users thought it was safe to install; otherwise they expected us to have done something to prevent it. I do not know the company or the product, so I cannot judge whether it is good, bad or malicious. I know we have 20 employees who have given it access to mail and calendar.

Looking at the details in the Office Store, I can see that the plugin gets read/write access to the current message. I assume this is something verified by Microsoft. But when I launch the app from within Outlook, it wants me to authenticate and to give consent for much wider access than what Microsoft listed: full read/write access to mail and calendars, and Send mail as the user. One user gave Boomerang consent to read/write mailbox settings (such as mail forwarding rules).

So the delivery method / attack vector now includes the Microsoft Add-In store as well. A nice clean piece of code in Outlook, using the backend web service to maintain access and do the bad stuff should be easy to get delivered by Microsoft directly to the employees.

Since apps are hybrid (Outlook and cloud), and the access tokens can be used from anywhere, there is really nothing Microsoft can do to screen the apps effectively. They can only see what is delivered into the user's Office, not what the app does in the cloud. This is simply how the cloud works.

I am scared - How to plug the hole

First, have your AzureAD administrator plug the hole: prevent users from giving consent to any further applications without admin consent. This is easy. Have him go to AzureAD, select "Enterprise applications", then "User settings" in the Manage section, and make sure "Users can consent to apps accessing company data on their behalf" is set to No.
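The same setting can, as far as Microsoft's documentation describes it, also be changed through Microsoft Graph, by setting `permissionGrantPoliciesAssigned` to an empty list on the tenant's `authorizationPolicy`. The sketch below only builds that PATCH request. The admin token is a hypothetical placeholder (it is assumed to carry the `Policy.ReadWrite.Authorization` permission), and the endpoint and body should be checked against current Microsoft documentation before use.

```python
import json
from urllib.request import Request

POLICY_URL = "https://graph.microsoft.com/v1.0/policies/authorizationPolicy"


def build_disable_user_consent(admin_token: str) -> Request:
    """Build a PATCH that removes every permission grant policy assigned to
    ordinary users, so that they can no longer consent to apps themselves."""
    body = {"defaultUserRolePermissions": {"permissionGrantPoliciesAssigned": []}}
    return Request(
        POLICY_URL,
        data=json.dumps(body).encode("utf-8"),
        method="PATCH",
        headers={
            "Authorization": f"Bearer {admin_token}",
            "Content-Type": "application/json",
        },
    )


req = build_disable_user_consent("admin-access-token")  # hypothetical token
```

Scripting the change makes it easy to apply the same secure setting across several tenants, but the portal switch described above achieves the same result.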

Don't trust the additional info shown when hovering over the info icon. It is plain wrong. It says that 3rd party apps can only see the user's profile data in your directory, but it should say that 3rd party apps can access any data in Office 365 that the user can access, or close to it.

You can set up an approval process etc., so Microsoft has the plumbing in place for those who change the insecure default to a secure one.

All consent and permissions already granted are still active. See next section for cleanup.

How do I clean up as an employee ?

If you are an employee in an enterprise, you can go to https://portal.office.com/account/#apps and see which apps you have given permission to access data on your behalf, and which apps Microsoft or the enterprise has given permission to access data on your behalf.

Here you can revoke the permissions for all the apps you have granted access to enterprise data. The details button does NOT let you review the granted permissions. It only displays a description the app designer has published, if any.

The only way to review the granted permissions is to withdraw the consent, sign in to the app, and then get the consent prompt once more.

It should be mentioned that in my experiments with granting and withdrawing consent, I have ended up with a permanent File Read/Write permission granted to draw.io that I cannot see anywhere. My user cannot see the consent, the admin cannot see the app on my user ID, and the admin cannot see my user on the app. BUT we can see in the log when it logs in and gets access, and I can confirm that it can read/write files. This is a bug.

How do I clean up as an administrator ?

The easiest way to get an overview of consents already granted by users is the Microsoft Cloud App Security portal, which possibly requires an E5 or AzureAD P2 license: https://portal.cloudappsecurity.com

Here you can go to Investigate, OAuth apps. Microsoft lists all external apps, with the number of users and the granted permission level.
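Without the Cloud App Security portal, a similar overview of delegated consents can be pulled from Microsoft Graph with `GET https://graph.microsoft.com/v1.0/oauth2PermissionGrants` (assumed here to be called with an admin token holding a directory read permission such as `Directory.Read.All`). Each returned grant carries `clientId`, `consentType`, `principalId`, and a space-separated `scope`. The sketch below works on an already-fetched JSON response and flags grants containing write or send scopes. The sample response and the choice of "high-risk" scopes are assumptions for illustration, not Microsoft's own classification.

```python
import json

# Assumed list of delegated scopes treated as high-risk in this sketch.
HIGH_RISK_SCOPES = {
    "Files.ReadWrite.All", "Sites.ReadWrite.All", "Mail.ReadWrite",
    "Mail.Send", "MailboxSettings.ReadWrite", "Calendars.ReadWrite",
    "Contacts.ReadWrite",
}


def flag_risky_grants(response_json: str) -> list[dict]:
    """Return one finding per grant whose scope string contains a high-risk scope."""
    findings = []
    for grant in json.loads(response_json)["value"]:
        granted = set(grant.get("scope", "").split())
        risky = sorted(granted & HIGH_RISK_SCOPES)
        if risky:
            findings.append({
                "grant_id": grant["id"],
                "client": grant["clientId"],
                "user": grant.get("principalId"),  # None for tenant-wide grants
                "risky_scopes": risky,
            })
    return findings


# Made-up sample response, shaped like the Graph list result.
sample = json.dumps({"value": [
    {"id": "g1", "clientId": "sp-a", "consentType": "Principal",
     "principalId": "user-1", "scope": " User.Read Mail.ReadWrite Mail.Send "},
    {"id": "g2", "clientId": "sp-b", "consentType": "Principal",
     "principalId": "user-2", "scope": "User.Read openid profile"},
]})
findings = flag_risky_grants(sample)
```

An administrator can then revoke a flagged grant with `DELETE https://graph.microsoft.com/v1.0/oauth2PermissionGrants/{id}`, using the `grant_id` from the finding.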

You can easily approve and block apps that already have user consent.

We have many employees using an app called "iOS Accounts" with High permission level. It can access mailboxes as the signed-in user. The publisher is listed as Apple Inc.

Then we can see some redirect URLs - This is where the access token is sent. And it is not sent to the internet, but to some URLs like com.apple.mobilemail://oauth-redirect. This is our best indicator that it is not accessing data from the cloud.
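The redirect-URL heuristic used here can be written down. The sketch below classifies a redirect URI as a custom scheme handled by an app on the device, a loopback address on the user's own machine, or a web address on someone's server. It is an assumption-laden heuristic, not a verdict: an app on the device can still forward what it receives elsewhere.

```python
from urllib.parse import urlsplit


def classify_redirect_uri(uri: str) -> str:
    """Rough guess at where an OAuth redirect delivers the authorization result."""
    parts = urlsplit(uri)
    if parts.scheme in ("http", "https"):
        if parts.hostname in ("localhost", "127.0.0.1", "::1"):
            return "loopback"  # delivered to a listener on the user's own machine
        return "web"           # delivered to a server reachable on the internet
    return "native"            # custom scheme, handled by an app on the device


print(classify_redirect_uri("com.apple.mobilemail://oauth-redirect"))  # native
print(classify_redirect_uri("https://app.example.com/callback"))       # web
```

The second URI is a hypothetical example of a web redirect.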

I am pretty sure this is all legitimate and is access from iPhones. But even an expert might have difficulty judging whether an app is good or not.

The good thing

There is one light in the darkness. All 3rd party apps have an app ID that they use to get access, so Microsoft holds the master keys to revoke access for individual web apps if and when they are found to be malicious.

Even with a standard AzureAD license you can see applications, but it is difficult to get an overview. You can click on an app like Amazon Alexa, then Users and groups, and see how many users have granted it access. But there are apps without users as well, and the list is very long. Alexa has access to Contacts and Calendars for the 2 employees who have given it consent.
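The per-app user count can also be computed from the `oauth2PermissionGrants` data that Microsoft Graph exposes. Note that `clientId` on a grant is the object ID of the app's service principal in your tenant, not the app ID itself. The sketch below counts distinct consenting users per client and separately lists tenant-wide (admin) grants; the sample grants are invented.

```python
from collections import defaultdict


def consent_summary(grants: list[dict]) -> tuple[dict[str, int], set[str]]:
    """Count distinct consenting users per client, and collect tenant-wide grants."""
    users_by_client: dict[str, set[str]] = defaultdict(set)
    tenant_wide: set[str] = set()
    for grant in grants:
        if grant.get("consentType") == "AllPrincipals":
            tenant_wide.add(grant["clientId"])  # granted by an admin for everyone
        elif grant.get("principalId"):
            users_by_client[grant["clientId"]].add(grant["principalId"])
    counts = {client: len(users) for client, users in users_by_client.items()}
    return counts, tenant_wide


# Invented sample grants for illustration.
grants = [
    {"clientId": "sp-app1", "consentType": "Principal", "principalId": "u1"},
    {"clientId": "sp-app1", "consentType": "Principal", "principalId": "u2"},
    {"clientId": "sp-app1", "consentType": "Principal", "principalId": "u2"},
    {"clientId": "sp-app2", "consentType": "AllPrincipals", "principalId": None},
]
counts, tenant_wide = consent_summary(grants)
print(counts)       # {'sp-app1': 2}
print(tenant_wide)  # {'sp-app2'}
```

Using a set per client avoids double-counting a user who appears in more than one grant for the same app.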

The Microsoft response

I contacted Microsoft when I became aware of this huge risk to many companies, which nobody was really aware of. Microsoft asked me to hold back the release of the information and do responsible disclosure.

Microsoft has spent the waiting time to create or update this guidance document - It is dated after I contacted them.

https://docs.microsoft.com/en-us/microsoft-365/security/office-365-security/detect-and-remediate-illicit-consent-grants

They also referred me to a document updated about a week before I contacted them, where they recommend blocking user consent to minimize the attack surface. So the recommendation is actually to change the default settings. In other words, Microsoft ships defaults that do not follow its own recommendations.

https://docs.microsoft.com/en-us/azure/security/fundamentals/steps-secure-identity#block-end-user-consent

The weekly monitoring/discovery in the detect-and-remediate document is more or less the equivalent of recommending running anti-virus software at least once per week. If the access is abused to plant malware and have users click on it, then once per day is not sufficient. And the fact that the events might take 24 hours to appear in the log is not good either. Even hours might be too late.

Conclusion

None of us knows which 3rd parties already have access to what data in our Office365 installations. Users have been handing out access for years. It is only by pure chance that we have not seen all the hacker groups use this opportunity instead of phishing, RDP hacking etc.

All our users have completely forgotten that they granted any access to any application. We have users who granted access years ago and removed the app right after, but the permission grant is still there.

Microsoft is shipping Office365 / AzureAD with default settings that are NOT secure. This opens the door to something easier and far better than phishing: not getting the user's password, but getting the user's access tokens (which are not restricted by logon security), along with high permissions.

All 3rd parties can abuse permissions granted for one purpose to do other things. On purpose, or if they are hacked. The access token can be shared, together with the password/secret the app provider uses when talking to Microsoft. It can be traded like passwords, and used anywhere.

Microsoft should enable security by default (required by the GDPR) and take the hit it will have on their ecosystem of Office365 app providers. In my opinion, the EU should look into this. And all EU companies MUST look into this if they store personal data in the Office365 cloud, including O365 mail.

I see no good reason why Microsoft should, by default, permit employees to share enterprise data in Office365 without administrator consent. Microsoft knows it is enterprise data, and not the employee's to do with as he or she wants. The consent is no excuse, except maybe in the USA, where you can get everybody to sign away any responsibility.

Looking a bit further, I would like Microsoft to let the enterprise decide exactly which permissions employees can grant to 3rd parties without administrator consent. Then we could open up areas where our risk assessment says we have no PII (Personally Identifiable Information) or sensitive data. It would also make sense to distinguish between apps running on the user's device, where the user signs in, and apps running on web servers elsewhere.

Bluemail supposedly only processes data on the user's device, yet the token is sent to bluemail.me. Flow-e, on the other hand, is a web app that fetches data from Microsoft, processes it, and displays it in the user's browser in near real time. Here Flow-e is processing data. It might be PII, which would require the enterprise to perform a risk assessment and get a Data Processing Agreement with the vendor. If Bluemail does what it says, and does not fetch and process data on its servers, it might be acceptable to an enterprise without a Data Processing Agreement. But the fact that the token can be sent to their servers is enough to make me doubt.

Sample website

A sample "phishing" web page (fully working) has been set up at https://easypdf.ltd/. It promises functionality to the user but does nothing except ask for lots of consents, and it lets you access your data afterwards.

Going directly to https://easypdf.ltd/microsoft/ allows you to come back at a later stage, and using your cookie, regain access to your account without reauthentication.

If you do use it, please clean up your consent on your user account afterwards. The server might be hacked, and the hacker would then be able to reuse your access token, together with the app ID and password.
